Organizations typically consider multi-datacenter setups for two main reasons:

- Business expansion into different geographical regions

- Enhanced reliability requirements that take precedence over pure performance

When implementing a multi-datacenter setup, there are three critical aspects to consider during both normal operations and disaster scenarios:

- Consistency: Will data be lost if a datacenter fails and operations switch to another datacenter?

- Availability: Will a datacenter failure cause partitions to go offline? This can happen due to:

- No in-sync replicas being available

- Zookeeper cluster failing to form a quorum and elect new leaders

- Broker unavailability

- Performance: What are the expected latency and throughput characteristics?

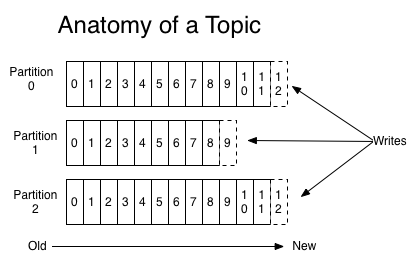

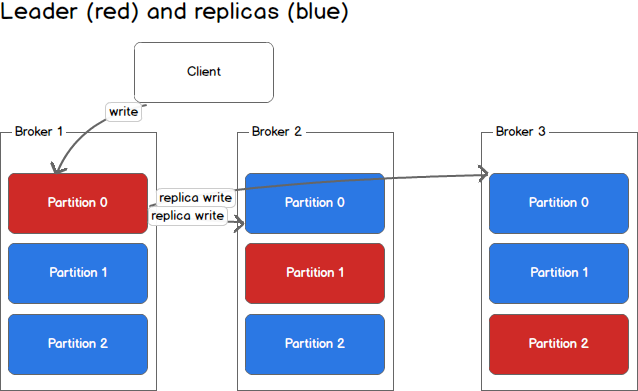

These considerations are closely tied to how producers receive confirmation of message writes, which is controlled by the acks parameter:

-1/all: All In-Sync Replicas (ISR) must report back and acknowledge the commit - slowest but most consistent0: Messages are considered written as soon as the broker receives them (before commit) - fastest but least consistent1: Messages are confirmed when the leader commits them - most common setting balancing speed and data security

Before discussing different setups, here are some key abbreviations used throughout this article:

nr-dc: Number of data centers

nr-rep: (default.replication.factor) Number of replicas

ISR: In-sync replicas

min-ISR: (min.insync.replicas) Minimum in-sync replicas

All scenarios discussed below assume producer setting acks=1 and topics created with default settings.

Two data centers and 2.5 data centers

Note: 2.5 DC is basically 2DC+1 zookeeper on the third DC.

To set a tone here, two data centers set up for Kafka is NOT ideal!

There will be trade-offs for two dc setups. before continue you need to understand the concept of CAP theorem.

According to LinkedIn engineers, Kafka is a CA system. If there is a network partition happens, you will have either split brian situation or failed cluster. It is a choice when you have to shut down part of the cluster to prevent split brain.

When comes to two dc setups, you can not even achieve CA across the cluster either. Having said that, you can still tune individual topics to have either consistency OR availability.

Stretched cluster

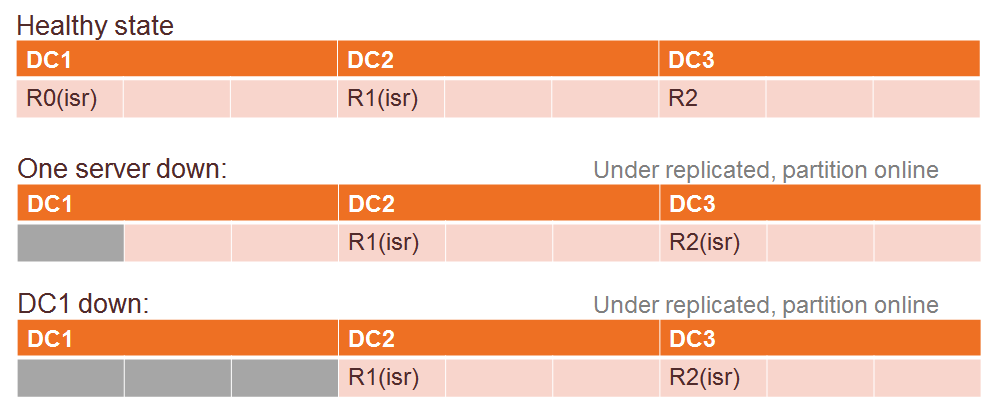

With rack awareness, two data centers joined as one cluster. The rack awareness feature spreads replicas of the same partition across different racks/data centers. This extends the guarantees Kafka provides for broker-failure to cover rack-failure, limiting the risk of data loss should all the brokers on a rack/data center fail at once.

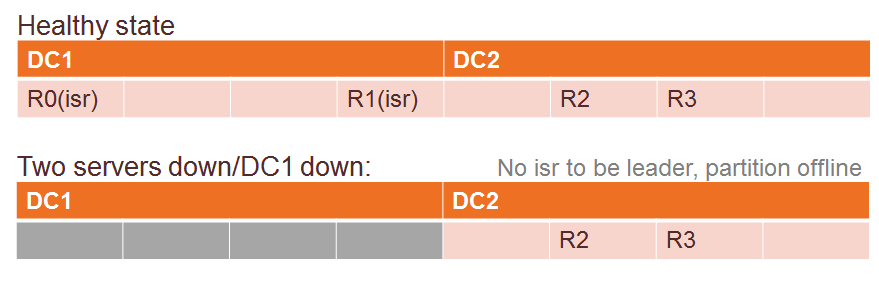

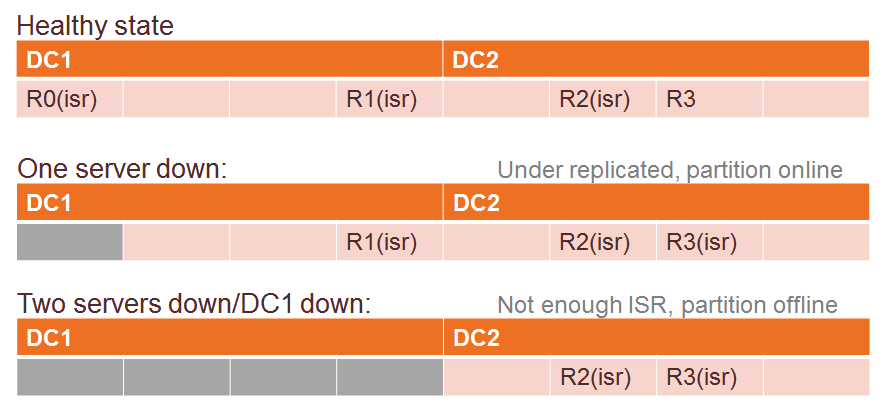

Consistency first configuration

min-ISR > round(nr-rep/nr-dc)

The minimum in-sync replica is larger than replicas assigned per data center.

Consistency

With this setup, it is guaranteed to have at least ONE in sync replica on the other data center. When there is one data center offline, the data is already secured on the other data center.

Availability

One data center goes down will render partitions goes offline because it just won’t have enough in-sync replicas (even at least one replica is in-sync).

The way to re-enable the cluster is to either

- Change the min-ISR setting to less than replica per data center, a manual intervention.

- Re-assign partition allocation, also a manual intervention.

In a single tenant environment, producers need to able to buffer messages locally until the cluster is back functioning.

In a multi-tenant environment, there will probably message lost.

Whichever partition leader you are writing to will need to replicate to the other data center. Your writing latency will be the sum of normal latency plus latency between data centers.

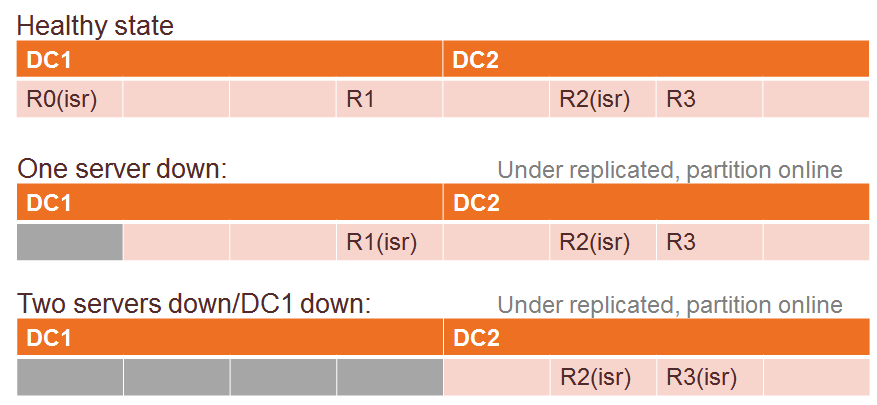

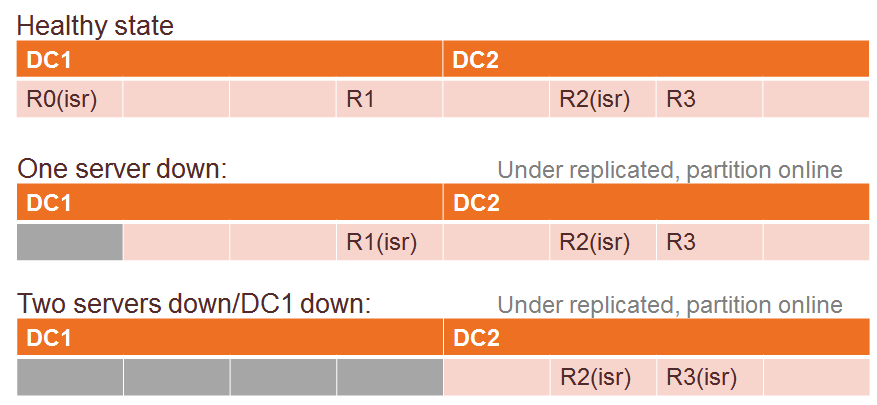

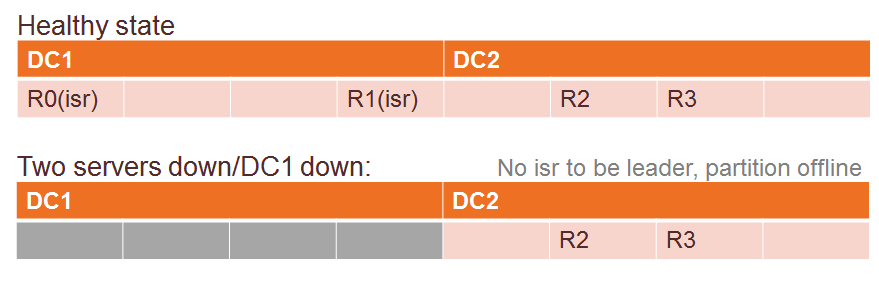

Availability first configuration

nr-rep – min-ISR >= nr-rep/nr-dc

There are more nodes can be down at the same time(nr-rep – min-ISR) then the number of replicas per data center.

There are actually two cases here:

- ISRs reside in the same data center, surprise! rack awareness does not honor ISR.

- ISRs spread out to different data centers.

Since there will be so many partitions in the cluster, you won’t avoid the first case anyway.

Why this is important? because by default, non-ISR cannot be elected as leader replica, if there is no ISR available, the partition goes offline.

To make this work, there is another piece to this puzzle: ```unclean.leader.election.enable=true````.

Here is what happens when one data center fails.

Consistency

This setting will allow followers to become leaders and you will have data missing for records did not sync in time and there definitely will be some. When old leaders come back online, that will bring back the missing records and maybe create duplicates as well.

Availability

This setup allows one data center goes down entirely.

Less min-ISR means better performance, especially data not necessarily synced across to the other data center before responding to the producer.

2.5 data centers

As I mentioned before, to perform any failover recovery you need zookeeper quorum and we just mentioned partitions in previous setups.

In previous setups, you need to form a zookeeper cluster (odd nodes) across two data centers that means one in dc1 two in dc2 or two in dc1 and three in dc2.

If one data center with more zookeeper nodes went down it brings the zookeeper cluster with it, because zookeeper needs a majority to perform election.

With 1/3/5 zookeeper(s) resides on the third data center greatly reduced the risk on the zookeeper cluster.

Replicated cluster

From producer or consumer’s perspective, there are two clusters and data is synced with an almost guaranteed delay between them.

There are a set of popular tools:

- Mirror maker - Apache

- Proprietary Replicator - Confluent / Uber /IBM

Why? because Mirrormaker from Kafka is far from perfect.

- It replicates data but topic configuration.

- Takes a long time and sometimes give up rebalance when mirroring clusters with a lot of partitions. This effects every time you need to add a new topic as well.

Active-Active and Active-Passive are the two concepts to replicated clusters

AA: Producers and consumers may using both clusters to produce and consume data. With delays in between, the order is not guaranteed outside of the data center.

AP: The other cluster is hot standby, and switching to the other cluster needs reconfiguration on the client sides.

Consistency

Well there will be data cannot be synced in time, and that creates inconsistency.

Availability

With the active-active design, you can put both clusters into bootstrap server list in theory(and not recommended) but that will sacrifice ordering and a bit performance as well, since you may be connecting to the server that is further away geographically.

Other than the case described above, if there is a data center offline, you need to do a manual recover and switch to another cluster.

I think this may be one of the biggest advantages. Clients can choose to talk to the cluster geographically closer.

Three data centers or more

min-ISR > round(nr-rep/nr-dc) same formula as consistency first setup.

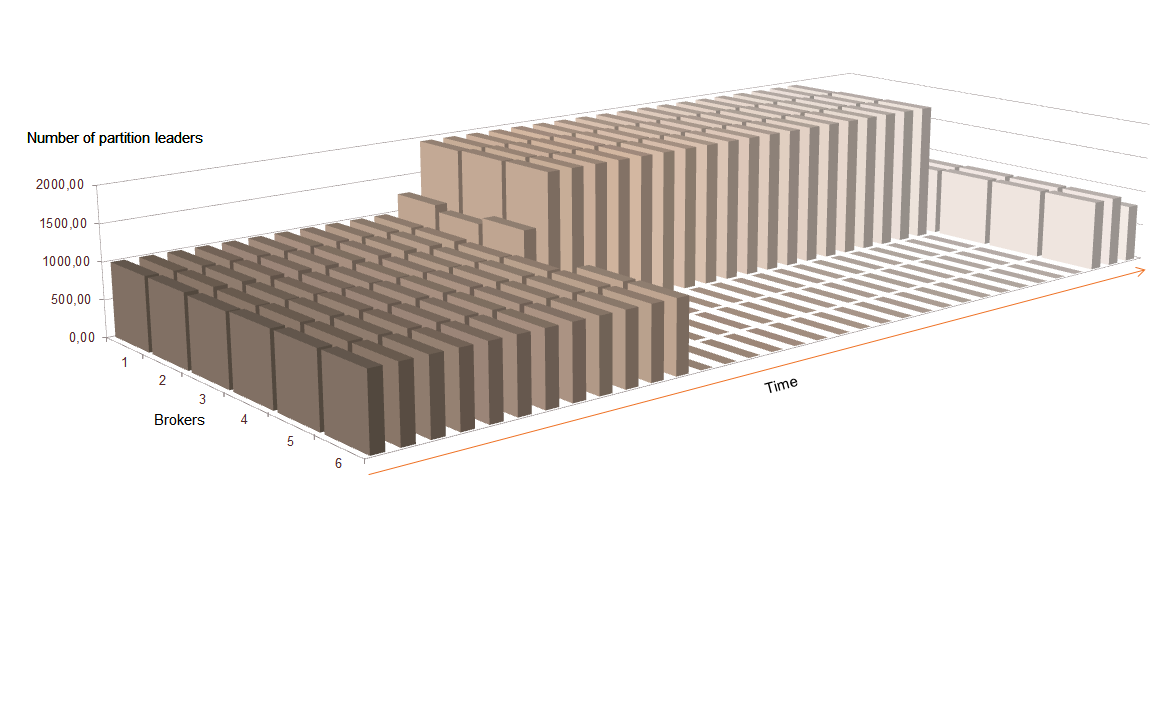

A stretched three data centers cluster is, in my opinion, the best setup. Consistency and availability can be achieved with little effort.

nr-rep = nr-dc * n

min-ISR = n+1

Where n=1/2/3… and n+1 <= number of nodes per data center.

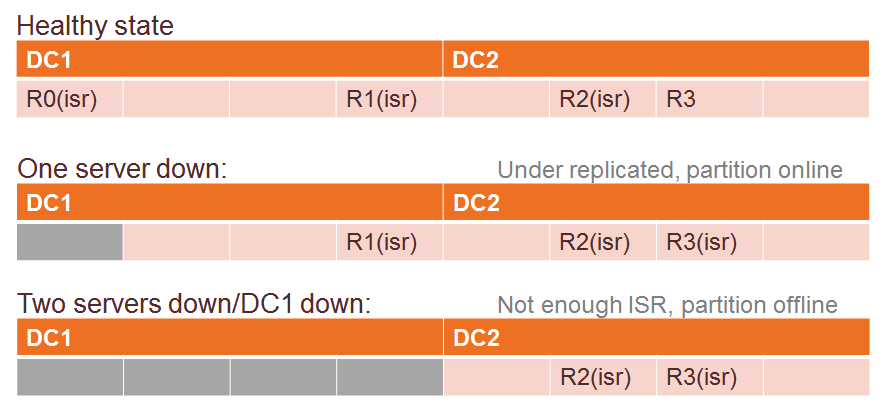

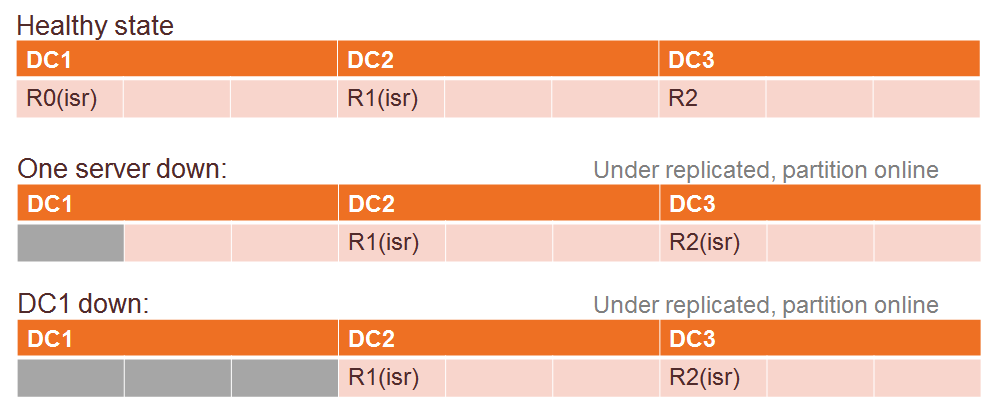

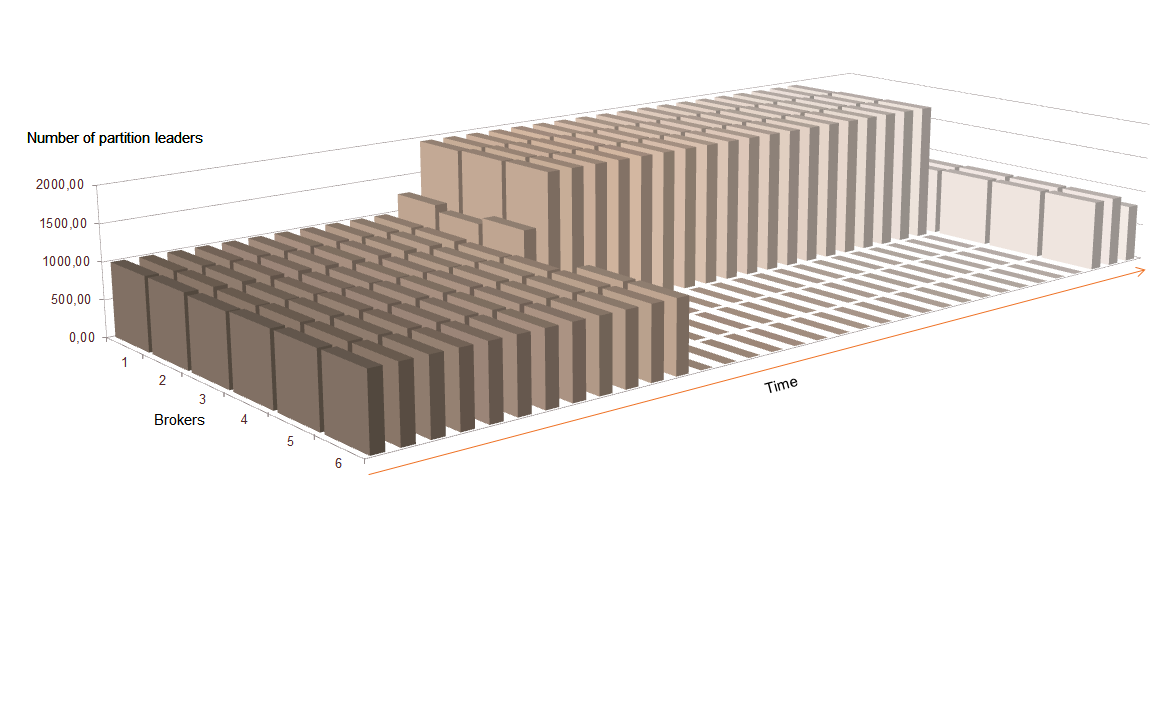

Here is a simulation for 3 replicas and min-ISR=2 setup:

Consistency

When a record is delivered, it is guaranteed there is a replica lives on another data center.

Availability

It can suffer a total loss of ONE data center and still available to read and write.

Same performance attribute as two data centers stretched cluster. All requests have to add latency to sync replica to another data center.

Summary

With one data center, Kafka can achieve consistency and availability on the local level. When comes to multi-datacenter setup you can prevent incidents that happen once every few years.

Although data center failure is rare, it is important to feel safe and prepared for such situations.

Choose and implement a solution to your needs and remember that, you have the chance to customize topics individually as most of the configurations are per topic. If it is possible, go for the three data center setup, it can achieve both consistency and availability on the cluster level with little overhead.

I am interested in site reliability topics in general, let me know your thoughts!

References